Securing the New Era of AI-Driven Operating Systems: A Novice’s Tale

Don’t want to read? Then listen

Imagine a world where you’re interacting with a device (computer, phone, etc.) you no longer need to switch between applications or frequently use your hands for, but instead, you’re using your voice and gestures. Chatting with your device allows you to achieve all the tasks you have in mind. Over time, that device will proactively take more tasks off your hands, completing them effortlessly and enhancing the quality of each output. And weirdly, sounds like Scarlett Johansson

This description offers a glimpse into the remarkable movie “Her,” which I highly recommend if you haven’t seen it yet. It’s the most optimistic portrayal of humanity’s future dynamic with AI.

Today, many cool kids within generative AI (GenAI) are exploring the concept of operating systems. Specifically, they are considering what it would look like if GenAI were the central element of our devices, functioning as an operating system (OS). When playing this scenario out, the possibilities are exciting but also scary.

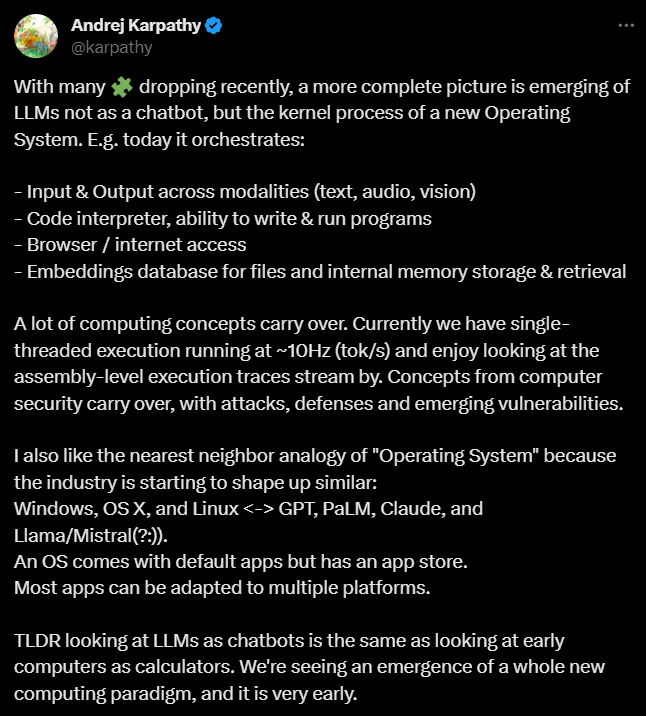

Let’s start from the beginning. In September 2023, Andrej Karpathy (one of the ML prophets) brought widespread attention to the idea of GenAI being at the heart of our future OS. Interestingly, I discovered another smarty pants discussing this concept earlier in April 2023. Between April and September, conversations about the GenAI OS began to merge and intensify.

Two months later, in November 2023, Andrej doubled down on this idea of a GenAI OS by sharing a diagram with some additional thoughts. But before diving into the future GenAI OS, it’s important to understand the purpose of existing operating systems.

Why do operating systems exist?

Let’s start with the fundamental question… What is the purpose of an operating system?

The purpose has evolved (great video on its evolution), but today, its main mission is to serve as the connector between user intent and the actions executed by the hardware and software on the device. An OS is made of different parts, the primary components are – the kernel, memory, external devices (like a mouse and keyboard), file systems, the CPU, and the user interface (UI). There are more, but we’ll keep it simple.

The kernel is at the core of it all, ensuring user applications (everything we interact with) have sufficient compute and memory resources to achieve their job in a reasonable timeframe.

Beyond the kernel, the OS provides several critical functions. It offers a user-friendly interface, typically graphical for most of us normies (not command-line), enabling easy interaction with computers. It also handles the storage, retrieval, and organization of data, making accessing and managing information easier for users and applications. Furthermore, OS’s are crucial for maintaining system security, managing user permissions, and protecting against external threats. Lastly, they communicate with hardware through drivers, translating input like mouse movements into actionable instructions.

Now that we have a basic understanding of an OS, let’s look at the mapping between current and future GenAI OS’s.

Old school OS vs. GenAI OS

We’re heading into uncharted territory, so be warned! Pontificating about a fundamentally new OS will come with some serious assumptions and logical leaps, but I promise most of it makes sense. 😉

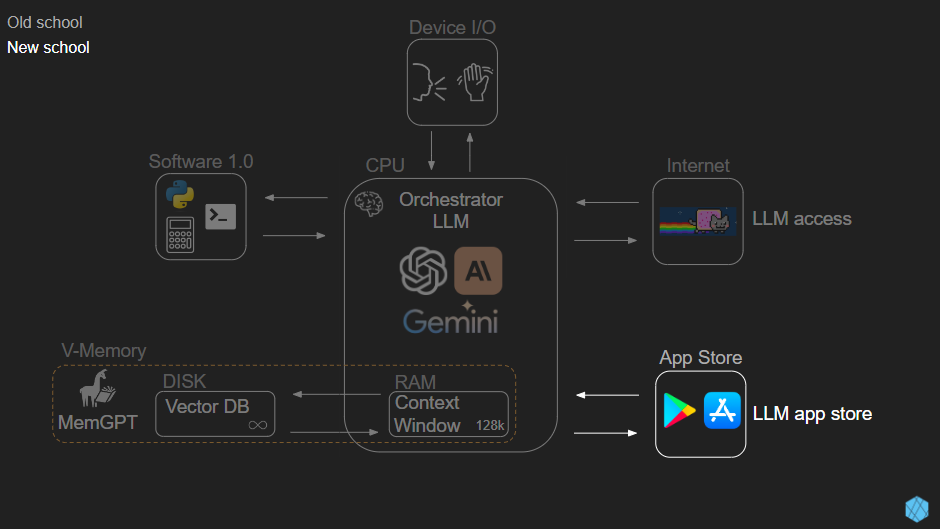

Let’s begin with a bulleted list comparing traditional OS components to their counterparts in a GenAI OS:

- CPU = Orchestrator LLM

- RAM = Context Window

- Virtual memory = MemGPT

- External storage = Vector DB

- Software 1.0 = Python interpreter, calculator, etc.

- App Store = Other finetuned LLMs

- Browser = LLM with internet access

- Devices (mouse/keyboard) = LLM generated voice, text, video, image

Here’s what it all looks like mashed together in modified versions of Adrej’s original diagram (above).

Don’t worry, I’ll walk you through each section. Let’s start with how users will interact with the GenAI-powered OS of the future.

I’m 63.7% confident that gestures and voice will be the primary ways we interact with devices in the future. Our GenAI OS will communicate through voice, video, image, or a blend of these. Imagine an adaptable UI, transforming based on the task, unlike static interfaces like Gmail, Excel, or PowerPoint.

We’re steadily progressing towards this future – the question is, do you see it?

Here’s why this is plausible: Apple’s Vision Pro introduces hand and eye tracking, advancing gesture control. OpenAI’s ChatGPT mobile app, arguably this year’s most underrated launch, brought voice interactions into the spotlight. Finally, the Humane AI Pin, a wearable AI companion, captured the internet’s attention (TED talk).

Once our user interacts with this new GenAI OS, what happens next?

At the core of our, GenAI OS is what I call the “orchestrator LLM”, functioning as both kernel and CPU. Managed by giants like OpenAI, Google, or Anthropic. This centralized role will orchestrate everything – retrieving data, updating memory, accessing the internet, pulling in other fintuned models, or leveraging software 1.0 tools (i.e. calculator). This is the brains of the operation.

A researcher named Beren provides insight that allows interesting comparisons between traditional and GenAI OS CPUs. For example, traditional CPU units are bits in registers, while in GenAI OS, they are tokens in the context window.

Traditional computers gauge performance via CPU operations (FLOPs) and RAM. In contrast, GenAI OS performance, like GPT4, is measured by context length and Natural Language Operations (NLOPs = made-up term) per second. To illustrate, GPT4’s 128K context length is akin to an Apple IIe’s 128KB RAM from 1983. Each generation of about 100 tokens represents one NLOP. GPT4 operates at 1 NLOP/sec, while GPT3.5 turbo achieves 10 NLOPs/sec. Although slower than the billions of FLOPs/sec of CPUs, NLOPs are more complex.

As larger LLMs evolve, we’ll increasingly rely on them, paving the way for autonomous agents. Both Andrej and Anshuman (Hugging Face) speculate future GenAI OS versions will likely have autonomous agents working on our behalf to achieve macro tasks and self-improving through tasks that have reinforcement learning available. Crazy, I know!

We don’t have time to get into future versions of this GenAI OS today, but that could be an interesting future blog post.

In both traditional and GenAI OSs, we’ll need memory. The most common setup for memory within OS is RAM and disk storage. But what does that mean? Simply put, RAM sits inside our working memory (internal storage), making it easy to access quickly, but is limited in size. Disk storage is external and takes more time to retrieve, but it has more space for storing information.

Our GenAI OS will have a similar setup. The RAM is our context window, currently 128k in GPT-4, and a vector database like Pinecone or ChromaDB represents the disk storage. The amount of storage available in our vector database can vary, but for our purposes let’s say infinity and beyond!

So, there’s this cool paper from UC Berkeley, dubbed MemGPT. These folks are rehashing an old-school OS idea for LLMs: virtual memory. It’s a slick move computers use when they’re short on physical memory, shuffling data between RAM and disk storage as needed.

In our GenAI world, the orchestrator LLM takes the wheel on memory management. It’s a smart conductor, knowing exactly what context is needed based on user actions. Seamlessly moving data between the main context window (RAM) and vector database (disk).

Even though we’re eyeing the future, we’ll still need some software 1.0 tools before completely leaving that world behind. But… over time I think these tools will gradually disappear.

In GenAI OS’s first version, we’re still going to lean on stuff like Python interpreters, calculators, terminals, and so on. The importance of tools like this is self-explanatory.

But what about GenAI OS versions 2 or 3? As hinted earlier, we’re already seeing a dip in our dependence on old-school software 1.0 tools. Like those whispers about OpenAI’s Q* cracking grade school math, or BLIP-2’s finding on LLMs communicating in some high-dimension space we humans can’t understand.

It’s tough to say when we’ll fully ditch software 1.0, but my guess? It might happen way sooner than we’re all thinking.

Now, let’s talk about getting online. Good news: we’ve already tackled this with ChatGPT, which has Bing search baked right in. Sure, there’s room to grow in terms of response speed, quality, and sourcing, but these are things that’ll likely get better as we go.

Last we have an interesting concept shared by Andrej in a recent video around a future LLM app store, similar to Apple or Google’s app store today. In our GenAI OS scenario, our orchestrator LLM will download smaller finetuned models from the LLM app store and work together to achieve tasks more efficiently.

Last up, there’s this neat idea from Andrej’s recent video about a future LLM app store, kind of like what Apple or Google have now. In our GenAI OS setup, the orchestrator LLM will grab smaller, fine-tuned models from this LLM app store, working with them to complete macro tasks (think autonomous agents).

This strategy, using fine-tuned LLMs, is a time-saver and cost-cutter, while amping up the response quality. For example, instead of just using GPT-4 for coding, we could pull Google’s AlphaCode 2 for even higher-quality code at a lower cost.

Now that we’ve scoped out our future GenAI OS architecture, I’ve got one last traditional vs. GenAI OS market comparison to share.

The evolution of closed and open GenAI OS

Similar to the traditional OS market we’ve observed a divide between the LLMs that will likely drive future GenAI OS’s.

In the GenAI OS realm, we’re seeing a split similar to the traditional OS market. The closed-source heavyweights include big names like OpenAI, Google, and Anthropic. What’s interesting is how the open-source LLM landscape mirrors the old-school setup. Just as Linux and Unix are foundations of many open-source OSs, Llama (by Meta) might anchor future open-source GenAI OSs, along with one or two others like Falcon, Mistral, etc.

That said, the meaning of ‘open-source’ is evolving, leaning towards less openness. Traditional open-source purists argue that true openness should encompass the codebase, dataset, and model, not just the model alone.

Securing the future GenAI OS

As I delved into this future GenAI OS concept, my security alarm bells were ringing non-stop. I couldn’t help but imagine all the risky scenarios of entrusting our personal and financial data to an orchestrator LLM at the heart of our OSs. But lucky for you, I’m a techno-optimist, not your usual security skeptic. I believe we’ll find ways to beef up these systems’ security.

To kick things off, I’ll first outline which traditional security measures could be adapted to our new GenAI OS world. Then, I’ll touch on some LLM-specific security strategies to consider.

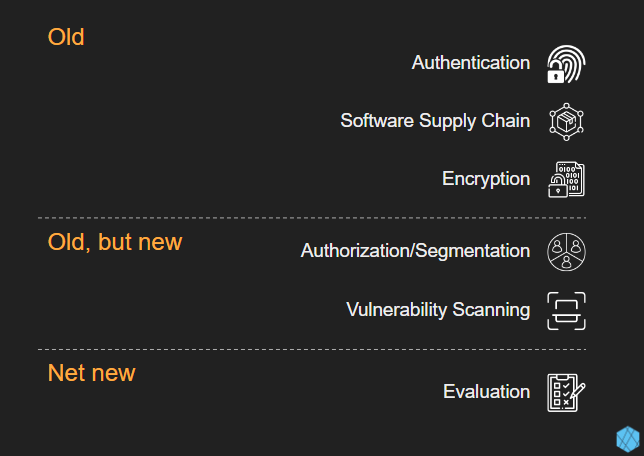

For this post, let’s keep it simple and categorize the security practices into three groups: Old, “old but new”, and net new.

Old

For our GenAI OS version 1, we’ll want to import some tried-and-true security measures like authentication, software supply chain (SSC) validation/verification, and encryption.

- Authentication: With voice and gestures as our main interaction modes, biometric authentication will be key. We’re talking voice, face, or fingerprint recognition here. I can already hear the security folks in the crowd screaming about how it’s possible to bypass these forms of authentication, especially voice (thanks to GenAI). But by integrating passkey and cutting-edge tech to sniff out fake biometrics, we’re heading towards solving these issues.

- Software supply chain (SSC): Our orchestrator LLM will rely on software 1.0 tools and finetuned LLMs from the LLM app store, both of which will be vulnerable to upstream attacks. The example for software 1.0 tools is clear, with companies facing constant SSC attacks. For LLMs, the challenge is tougher, given the nascent state of SSC security tools. A good starting point? Ensuring downloaded LLMs come from their claimed publishers (think SLSA for LLMs).

- Encryption: Considering that our GenAI OS’s vector database will store heaps of personal info (like social security numbers, health records, and financial details), encrypting this data whether at rest, in transit, or potentially even in use, is critical.

Old, but new

Moving on, let’s explore some traditional security practices reimagined with an LLM spin:

- Authorization/Segmentation: I’m grouping these, as they both aim to address different angles of a similar challenge. We have the dual LLM architecture and control tokens. Dual LLMs are all about splitting privileged and non-privileged LLMs. Privileged LLMs will have the ability to cause serious damage if taken over by an attacker, so all outside access is delegated to the non-privileged LLM (see more here). Control tokens, on the other hand, aim for a clear-cut separation at the token level between user inputs and system instructions. This is to ward off common prompt injection attacks. Think of it like “special tokens” in today’s terms, where you tell the LLM to sandwich specific types of info within set brackets (e.g., [CODE] … [/CODE]). It’s similar to how we separate user and kernel land in traditional OSs.

- Vulnerability scanning: Outside of traditional vulnerability scanning of software 1.0 tools, we’re witnessing an evolution in scanning tools specifically for LLMs. This includes emerging tools focused on testing LLMs against prompt injection attacks. Notable examples include Garak and PromptMap.

Net new

Finally, we have the net new security practices, here we’re mainly talking about LLM evaluations. I’ve written more on this topic in another post, so I’ll keep this concise. In a nutshell, using LLMs to evaluate other LLMs’ output is the most effective way defenders have found to ensure that the LLMs aren’t up to any mischief, leaking private info, or churning out vulnerable code.

Whew, that was a bit of a marathon post! I still have open-ended questions, but I’m curious, what do you think?! Is this all crazy talk? Can we realistically envision an OS revolving around LLMs? And what are the big hurdles that might stop us from reaching this future?

For all of you who have made it this far, thank you. I hope this gives you a clearer picture of what our future GenAI OS might entail, how it could operate, and the initial steps toward securing it.