OpenAI’s 2024 Decline: A Value Prediction

Don’t want to read? Then listen

This week’s post is inspired by a prediction Chamath made in a recent All-in podcast. He predicts a dip in OpenAI’s enterprise value in 2024. This isn’t a reflection on OpenAI, but rather an industry-wide shift. This view is contrary to what most people believe today.

Chamath’s prediction is rooted in his belief in capitalism. A key quote from this episode highlights this:

For the whole AI movement to be successful “it [AI] needs to be literally dirt cheap and as close to zero as possible, the minute that happens that [OpenAI/NVIDIA] revenue goes away”

There are three main assumptions…

- Model Quality: The quality of both closed source (GPT) and open source (Llama) models will continue to increase, but at different rates.

- Response time: Currently the response time from GPT-4 is slower than most consumers are willing to wait. This response time needs to decrease. Sidenote, I’ve personally noticed an improvement in GPT-4’s response times in the last month.

- Energy Cost: With energy prices falling over time, the cost of training these models is expected to decrease significantly.

These assumptions point to a market opportunity for arbitrage. Picture it as two balls rolling down separate slopes. On one slope, there are decreasing compute costs (covering energy, training, and inference). On the other, we have lowering response times.

👆This is capitalism at its finest 👆

Startups and the open-source community are poised to bridge the current gap through hard work and innovation.

Let’s look at this prediction in a bit more detail.

Response times and efficient computing

How long are consumers willing to wait for their machines to respond? Currently, Google says that a “good” server response time is 100 milliseconds.

Chamath, however, argues for much quicker AI interactions, suggesting response times should be around 30-50 milliseconds. That seems a bit extreme based on Google’s preference. But hey! Interacting with our future AI friends is a different experience, so lowering the response time could be what’s needed.

The current metrics used to evaluate an LLM’s response time vary, but the two most common I’ve come across are average time to first token and average time to last token. As you can see below, there’s a big gap, especially for GPT-4. In some cases, a 15x difference.

Exploring response times can also be done by testing a model’s speed over varying input lengths. For example, if I feed a model 500 words and it responds in 100ms, then feed it another 10k words, will it respond slower? The simple answer is yes. The response time will increase linearly, but the goal is to start with a lower millisecond response time to beat the competition. PromptHub completed a few good experiments in this area.

The question is, how long will these latency constraints remain a challenge? My guess is not very long, primarily due to two factors: more efficient models and chips. Let’s delve into the role of chips first.

The current buzz is around GPUs, but as AI data centers are built out, my bet is on chips specialized for training models. GPUs are good, but not great at training models, this is because they’re built for multiple functions like rendering graphics, editing video, etc. The specialized chips I’m referring to fall into two categories: ASICs and what I’m calling “monster chips”. ASICs are tailored for specific tasks like Bitcoin mining or model training. Our conversation will be focused on ASICs, but if you’re interested in “monster chips” listen to this podcast interview with the CEO of Cerebras.

Many might think that tech giants like Amazon, Microsoft, Meta, and Google will dominate the ASIC market, given their ongoing development of specialized AI chips. However, the AI chip market is competitive and expanding. So, it’s wise not to underestimate the potential of capable and ambitious startups in this arena.

Startups like Etched are going all in on creating ASICs dedicated to AI training. These ASICs are not only dedicated to training AI models but have the most popular architecture (transformers) burnt into their chips. This commitment has paid off with extremely fast inference.

Here’s a great podcast interview with one of the cofounders Gavin. Despite being only 21, he’s impressively knowledgeable and experienced in this field.

Since we’re so early in the AI revolution, I’m not sure I’d commit my entire startup to a single architecture such as the transformer. We’re already seeing new architectures like Mamba catching fire, but with that being said, Mamba seems to be complementary to transformers, not a replacement. For example, while transformers excel in short-term memory, they’re less adept at long-term memory, a gap Mamba seems to fill.

However, I’m also not a 21-year-old prodigy who’s already raised $5M for my startup, so take everything I say with a massive grain of salt. 😆

Small is beautiful

The trend in model size and efficiency is diverging into two distinct paths: “bigger is better” and “smaller is beautiful.” Currently, these approaches work well together and don’t directly compete.

The “bigger is better” philosophy is embodied by large foundational models like GPT-4, Llama 2, and Gemini Ultra. These behemoths, with trillions of parameters, require months of training and millions in computing costs.

Conversely, the “smaller is beautiful” movement focuses on small-language models (SLMs) like Phi-2, Orca 2, Mistral 7B, DeciLM-7B, Mixtral of Experts, and others. Many of these models are open-source and rival or even surpass GPT-3.5 and GPT-4 in certain benchmarks. They are notably cheaper, faster, and smaller. Companies like Apple are pushing research to ensure the inference fits onto our phones.

Let’s take a look at a more reliable source for comparing the quality of models like Chatbot Arena. As you’ll see GPT-4 is still the best, but a much smaller open-source model Mixtral of experts is not too far behind. Although a quality gap between closed-source and open-source models persists, the pace of improvement in both groups is similar, preventing the gap from widening further. Plus, if the adoption and quality of synthetic data improve that gap could shrink.

Energy costs

To wrap up this prediction, let’s focus on the falling energy costs.

We’ve discussed reducing training costs through more efficient chips and models. But what about energy?

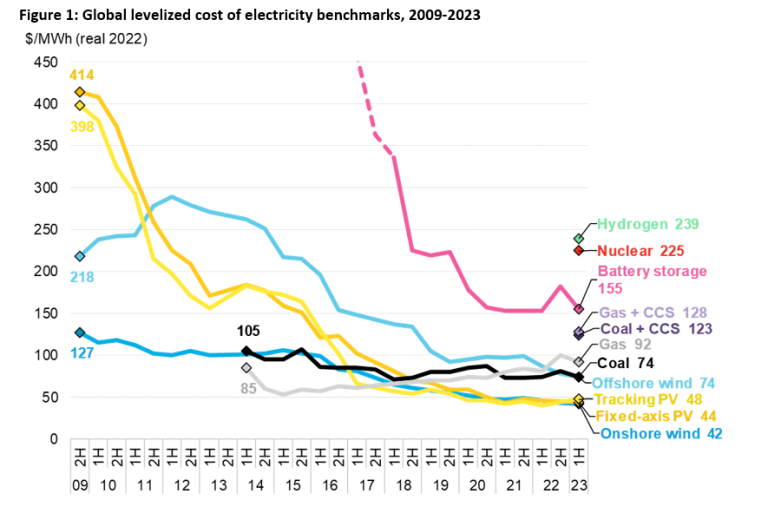

Over a long enough timescale, we’re seeing a downward trend in energy costs. This decrease is largely driven by renewable energy, but traditional forms of energy have been on the decline as well.

This decline is great, but it needs to continue. Remember our prediction is about 2024, I’m skeptical of the significance this cost reduction will have on training models in the short term. This is due to the increasing energy demands of AI-centric data centers.

The initial power demand for training AI is substantial and more concentrated compared to traditional data center applications. For instance…

“Initial power demand for training AI is high and is more concentrated than traditional data center applications. “A typical rack which consumes 30 kW to 40 kW, with AI processors, like NVIDIA Grace Hopper H100, it’s 2x-3x the power in the same rack, so we need new technology in the power converters,” (source)

When is enough, enough?

I’ll leave you with a thought to ponder: When is it enough?

For companies deploying these models, it often becomes apparent that solving client problems doesn’t always require a GPT-4 level model. A more specialized model that can consistently perform a specific task is usually enough. As open-source models improve, becoming more affordable and compact, they increasingly meet the ‘enough’ criteria for most companies.

The key question for companies is determining their ‘enough’ threshold. How do they know when they’ve reached it?

… And after saying all of that there’s still a chance OpenAI’s enterprise value continues to rise and there are two reasons why.

- Money: It’s never a sure thing when you’re betting against an organization that has aggregated most of the greatest AI engineering minds of our time.

- Magic: This aggregation will likely lead to more magical breakthroughs in AI. This could happen through the combination of existing architectures (transformer + Mamba) or some secret sauce OpenAI has cooking up in the background.